Hi 👋, I’m Zirui Song (/ˈziːˌruː.i/ /sɔːŋ/). I am a first-year PhD student in the NLP department at MBZUAI, supervised by Prof. Xiuying Chen and Prof. Xiaojun Chang. I received my Bachelor of Engineering (Honours) in Software Engineering with First Class Honours from the University of Technology Sydney (UTS), where I was also awarded the Dean’s List 2025 prize (top 2% of students). Before that, I had been a member of UTS-NLP since Oct 2023, where I was fortunate to be advised by Prof. Ling Chen and mentored by Prof. Meng Fang. I am deeply appreciative of my mentor, Prof. Dayan Guan, who guided me into scientific research.

💻 News

2025-11-08: One paper was accepted by AAAI 2026.

2025-09-19: One paper was accepted by NeurIPS 2026.

2025-08-21: 3 papers (1 oral) were accepted by EMNLP 2025.

2025-07-25: I was admitted to the degree of Bachelor of Engineering (Honours) in Software Engineering with First Class Honours.

2025-07-06: I won the Dean's List 2025 prize (top 2% of students) from UTS.

2025-07-02: One paper was accepted by ECAI 2026.

2025-05-16: One paper was accepted by ACL 2025.

2025-04-20: One paper was accepted by Nature Computational Science.

2025-03-01: I will commence my PhD studies at MBZUAI in August 2025.

2025-01-23: One paper was accepted by NAACL 2025.

2025-01-02: One paper was accepted by Communications Chemistry.

2024-09-25: First day as a visiting student at MBZUAI under the supervision of Prof. Xiuying Chen.

2024-09-20: One paper was accepted by EMNLP 2024.

2024-07-01: One paper was accepted by ECCV 2024.

2023-11-29: Prof. Ling Chen accepted me as an undergraduate research assistant at the Australian Artificial Intelligence Institute (AAII).

2023-07-01: I was honored to be selected as an international exchange student majoring in Software Engineering at UTS.

2023-05-18: Prof. Dayan Guan accepted me as a remote undergraduate research assistant at ROSE Lab.

Academic Service

- Conference Reviewer: EACL 2026, ICLR 2026, AAAI 2026, EMNLP 2025, COLM 2025, ACM MM 2025, NeurIPS 2025, ACL 2025, NAACL 2025, ICME 2025, IJCAI 2025, EMNLP 2024

- Journal Reviewer: IEEE Transactions on Artificial Intelligence (TAI)

💡 Research Interests

- Multimodal AI: My current research goal is to integrate multimodal information to improve the performance of large language models. I am also exploring applications of multimodal models in the geolocation and embodied AI domains.

- Trustworthy AI: I am also highly interested and experienced in studying jailbreaks and attacks on multimodal language models, particularly in the vision and audio modalities.

📖 Education

- 2025.08->2029.05 (Expected): PhD, Mohamed Bin Zayed University of Artificial Intelligence (MBZUAI)

- 2021.06->2025.05: B.E., University of Technology Sydney (UTS). QS Ranking: 88, U.S. News Ranking: 85. GPA: 3.90/4.00

📝 Publications

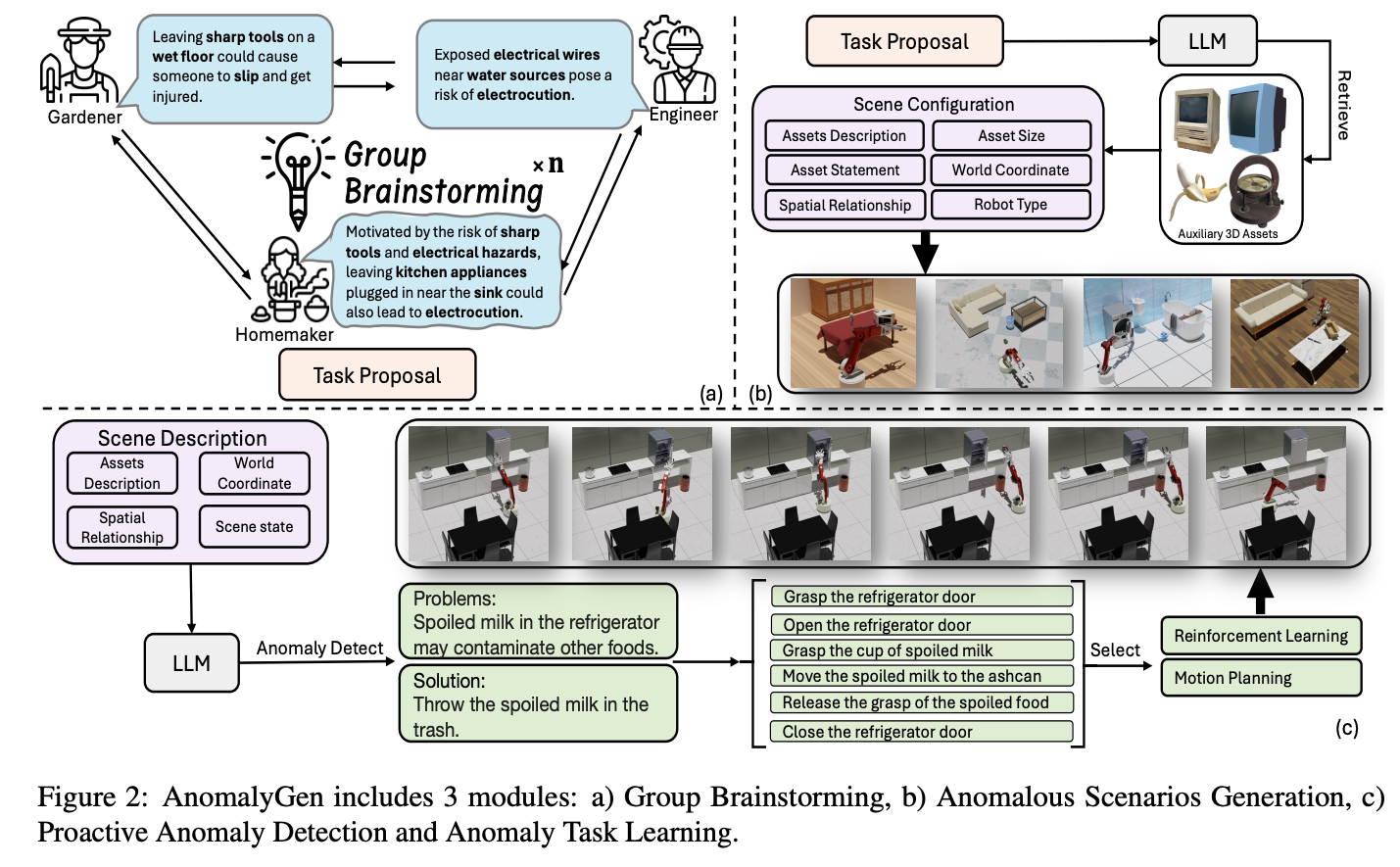

Hazards in Daily Life? Enabling Robots to Proactively Detect and Resolve Anomalies

Zirui Song*, Guangxian Ouyang*, Meng Fang, Hongbin Na, Zijing Shi, Zhenhao Chen, Yujie Fu, Zeyu Zhang, Shiyu Jiang, Miao Fang, Ling Chen, Xiuying Chen (*: first co-authors)

“AnomalyGen: A framework for generating virtual anomaly scene data without human annotation, to enhance the robustness of robots.”

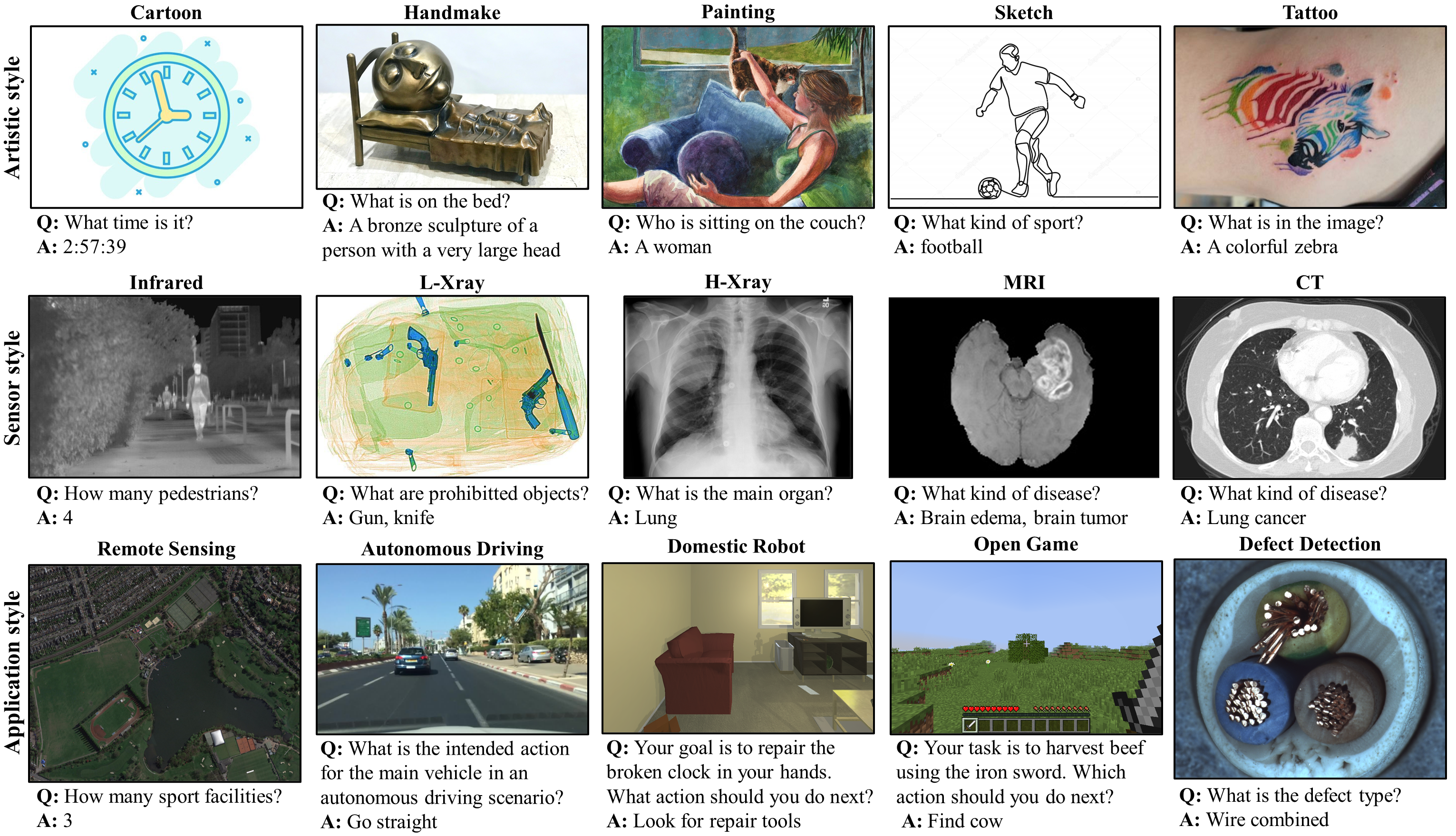

BenchLMM: Benchmarking Cross-style Visual Capability of Large Multimodal Models

Rizhao Cai*, Zirui Song*, Dayan Guan, Zhenhao Chen, Yaohang Li, Xing Luo, Chenyu Yi & Alex Kot (*: first co-authors)

“BenchLMM: A novel, comprehensive benchmark, specifically designed to investigate the cross-style capability of Large Multimodal Models (LMMs).”

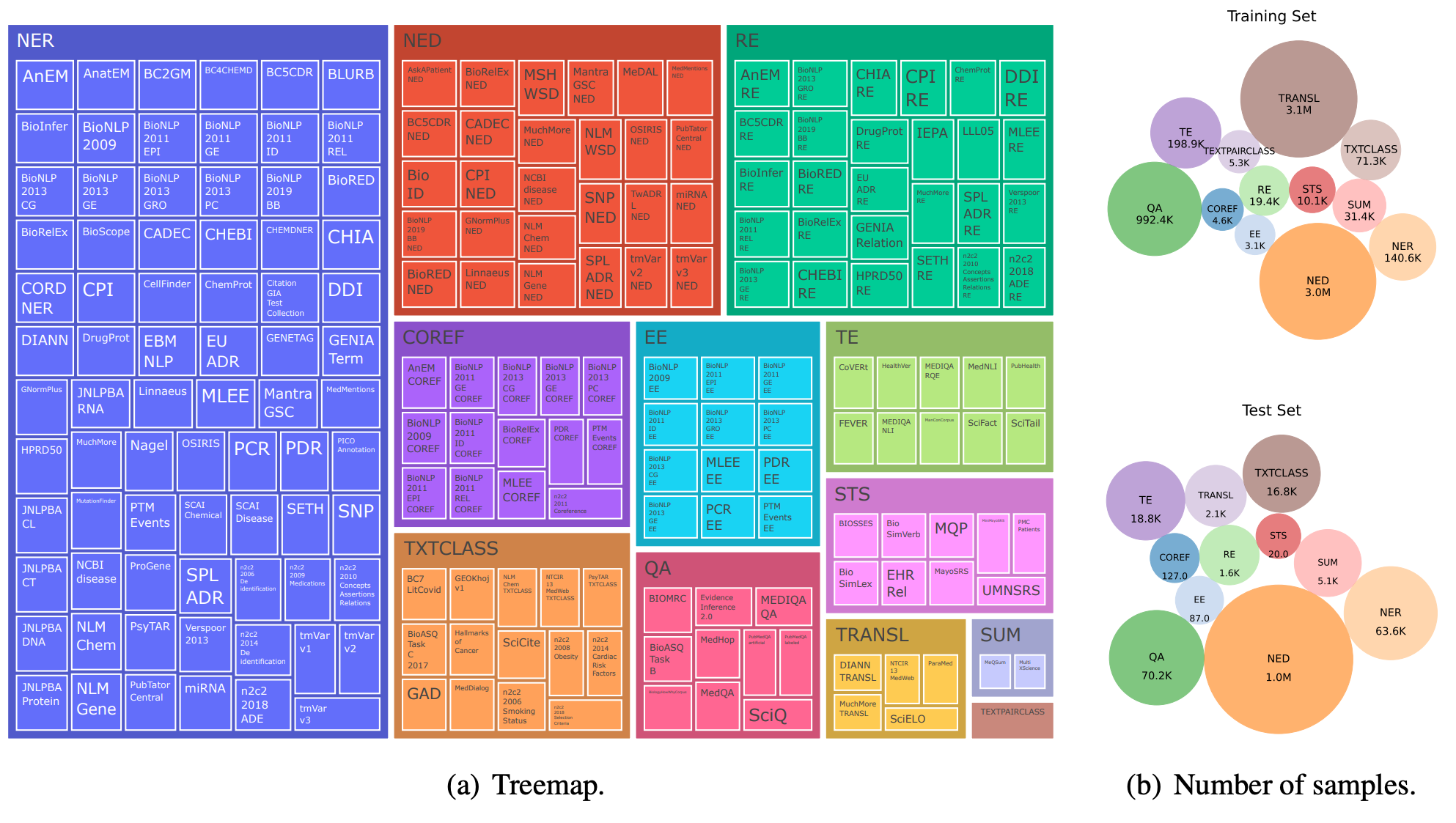

MedINST: Meta Dataset of Biomedical Instructions

Wenhan Han, Meng Fang, Zihan Zhang, Yu Yin, Zirui Song, Ling Chen, Mykola Pechenizkiy, Qingyu Chen

“MedINST, the Meta Dataset of Biomedical Instructions, a novel multi-domain, multi-task instructional meta-dataset. MedINST comprises 133 biomedical NLP tasks and over 7 million training samples.”

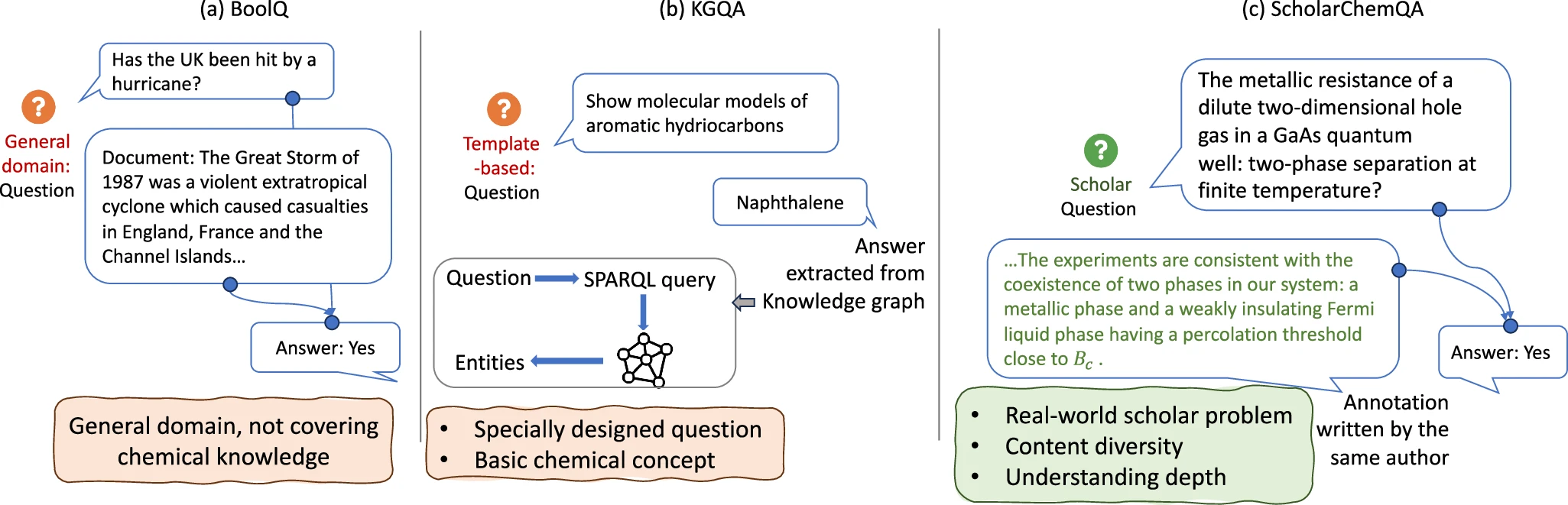

Unveiling the power of language models in chemical research question answering

Xiuying Chen, Tairan Wang, Taicheng Guo, Kehan Guo, Juexiao Zhou, Haoyang Li, Zirui Song, Xin Gao & Xiangliang Zhang

“ScholarChemQA, a large-scale QA dataset constructed from chemical papers.” Code

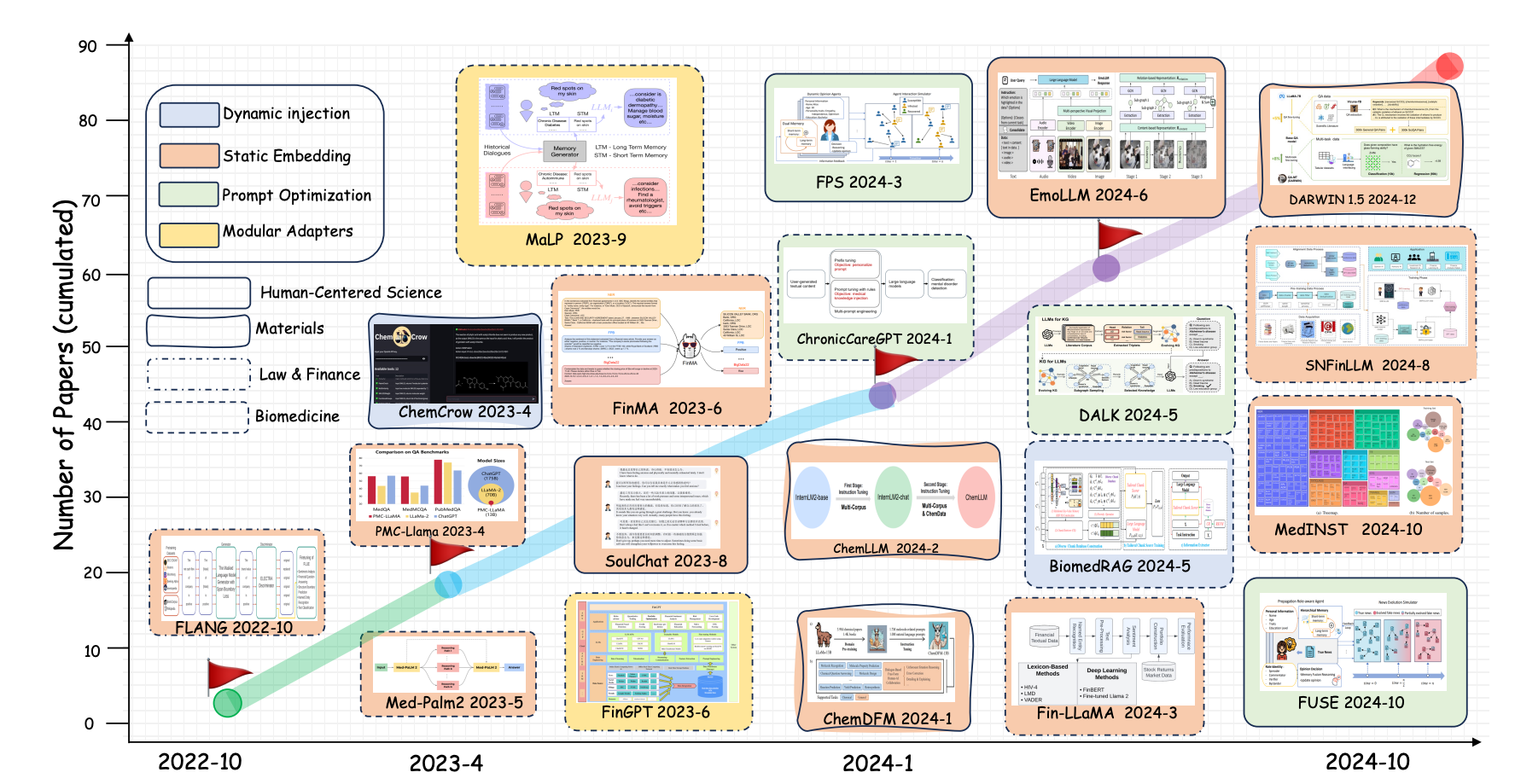

Injecting Domain-Specific Knowledge into Large Language Models: A Comprehensive Survey

Zirui Song*, Bin Yan*, Yuhan Liu, Miao Fang, Mingzhe Li, Rui Yan, Xiuying Chen (*: first co-authors)

“LLMs' general-purpose nature often limits their effectiveness in domain-specific applications that require specialized knowledge, such as healthcare, chemistry, or legal analysis. To address this, researchers have explored diverse methods to enhance LLMs by integrating domain-specific knowledge. In this survey, we provide a comprehensive overview of these methods, which we categorize into four key approaches: dynamic knowledge injection, static knowledge embedding, modular adapters, and prompt optimization.”

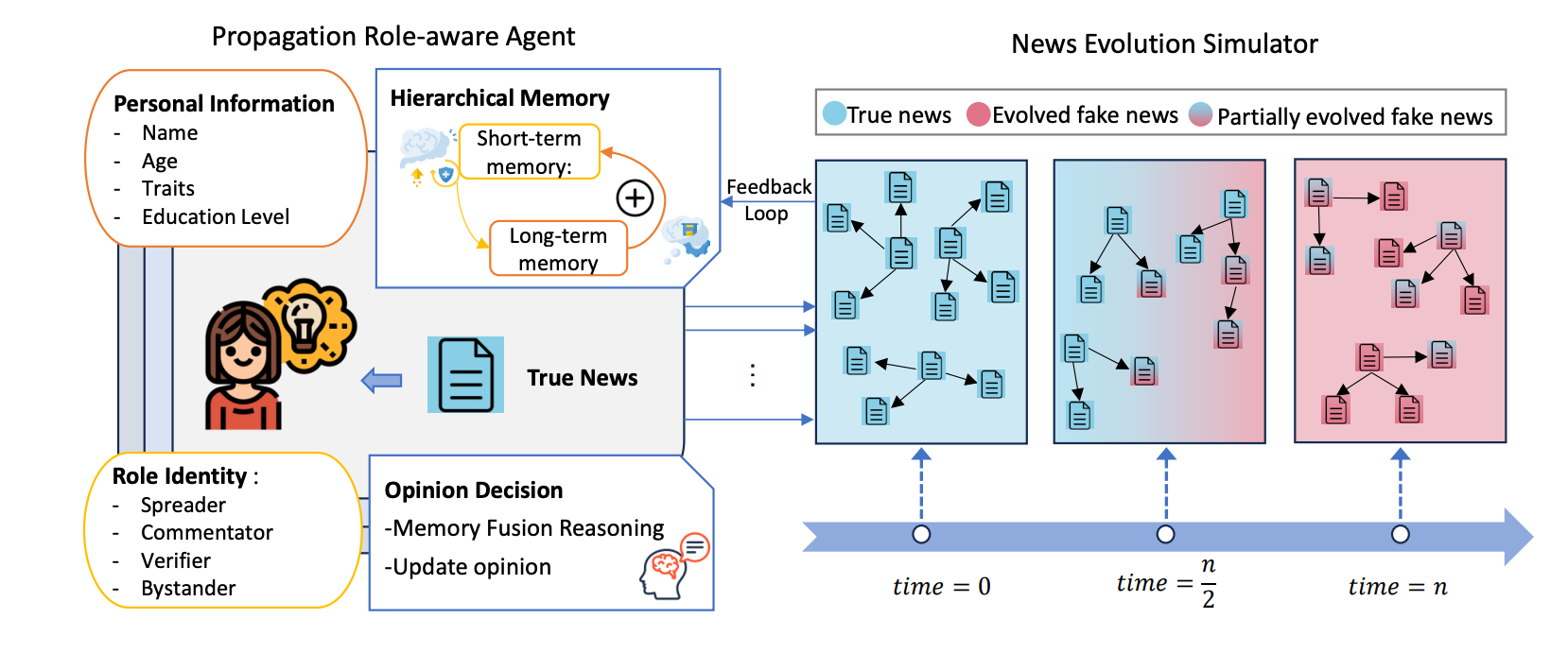

From a Tiny Slip to a Giant Leap: An LLM-Based Simulation for Fake News Evolution

Yuhan Liu, Zirui Song, Xiaoqing Zhang, Xiuying Chen, Rui Yan

“We take the first step toward simulating and revealing this evolution, proposing a Fake News evolUtion Simulation framEwork (FUSE) based on LLMs.”

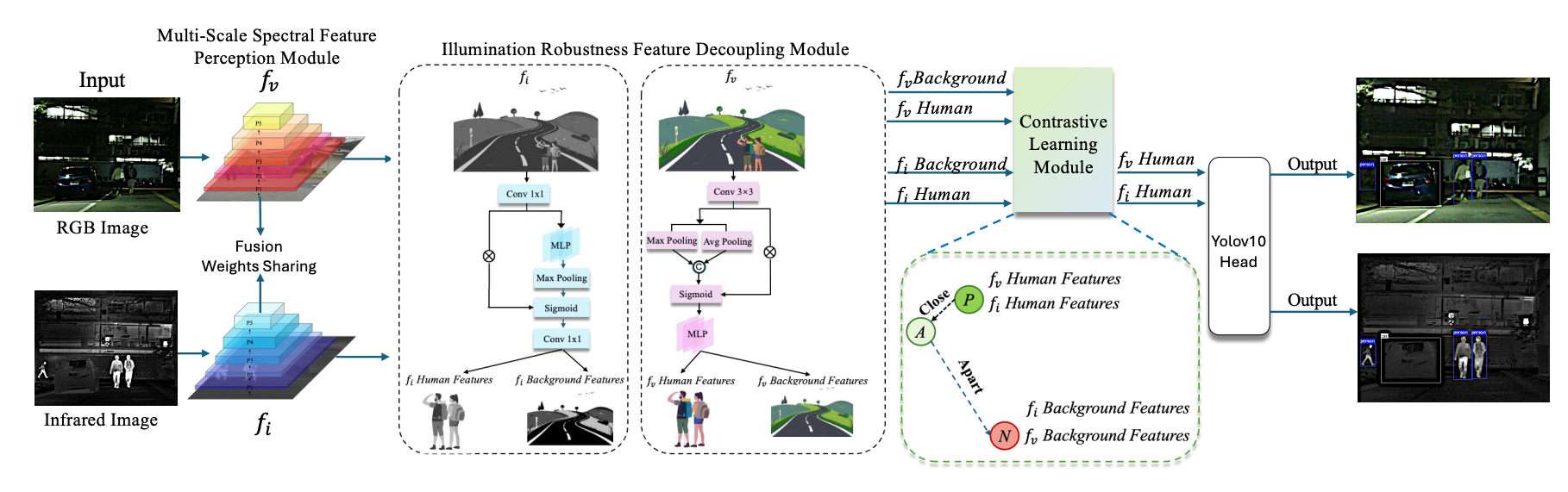

PedDet: Adaptive Spectral Optimization for Multimodal Pedestrian Detection

Rui Zhao*, Zeyu Zhang*, Yi Xu, Yi Yao, Yan Huang, Wenxin Zhang, Zirui Song, Xiuying Chen, Yang Zhao (*: first co-authors)

“PedDet, an adaptive spectral optimization complementarity framework specifically enhanced and optimized for multispectral pedestrian detection.”

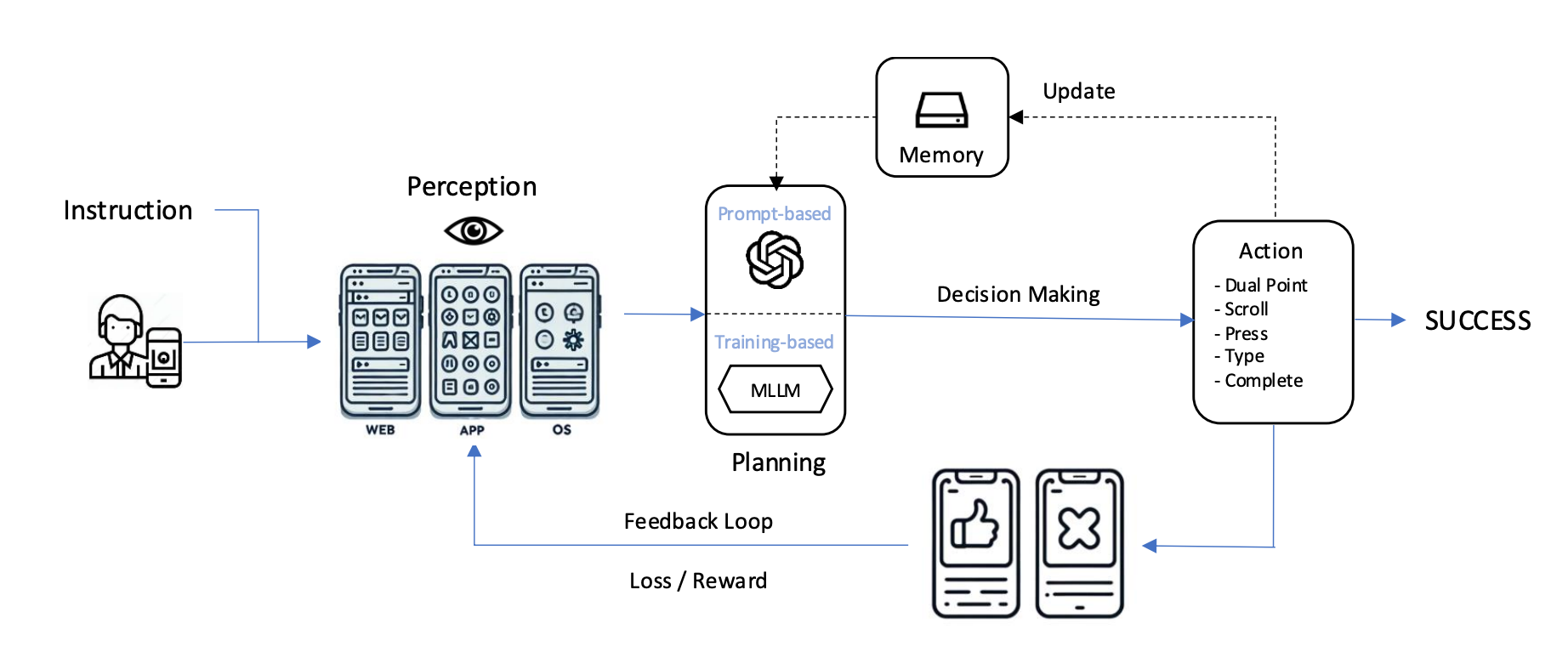

Foundations and recent trends in multimodal mobile agents: A survey

Biao Wu*, Yanda Li*, Meng Fang, Zirui Song, Zhiwei Zhang, Yunchao Wei, Ling Chen (*: first co-authors)

Note: The first author, Biao Wu, removed my co-authorship during the second submission to ARR 2025, and I have not received any explanation from him. I believe I still deserve credit for this work and disagree with his decision.

💼 Experiences

- [2024.09 - now] MBZUAI (Supervisor: Prof. Xiuying Chen, topic: Trustworthy MLLMs)

- [2023.10 - 2025.02] University of Technology Sydney, Research Intern (Supervisors: Prof. Ling Chen and Prof. Meng Fang, topic: Multimodal Agents)

- [2023.03 - 2024.01] Nanyang Technological University, Research Intern (Supervisor: Prof. Dayan Guan, topic: Multimodal LLMs)

🏆 Honors and Awards

- 🥈 Silver Medal, Kaggle - LLM Science Exam [51/2664], 2024

- 🥇 School Second Class Scholarship, 2022

📚 Resources

Blogs

- [05/24] [Chinese] National Undergraduate Innovation Project Documentation. [Link]

- [03/24] [Chinese] Negative Transfer. [Link]

- [03/24] [Chinese] Mixture of Experts Explained. [Link]

- [01/24] [Chinese] EMNLP 2020 Tutorial Notes (Topic: Explainable AI). [Link]

📜 References

You can find my full CV here (Latest update: Oct 14th, 2024).